Nvidia RTX 2050 Coming To Gaming Laptops in 2022 – But Why?

Nvidia has announced the RTX 2050, a graphics chip for gaming laptops arriving in spring 2022. But why? The 20 series for laptops was first announced nearly three years ago at CES 2019, so let’s discuss.

Ray Tracing?

The strangest part to me is the RTX branding. Given the 2050’s specs compared to the 2060 above it, ray tracing is probably going to be a pretty rough time in most games. Personally, I didn’t find that the mobile 2060 did a great job in this area (without DLSS).

I can’t imagine the 2050 doing particularly well here. It will of course depend on the specific game and RT settings. It will probably be fine for some subtle, less important RT effects, but definitely not for the full-blown ray tracing experience Nvidia has been selling us since it introduced “RTX” a few years ago.

At Least It Has DLSS

Fortunately, there’s more to “RTX” graphics than just RT, as the RTX 2050 will also offer DLSS support. This seems to be the biggest advantage on offer, as DLSS is now present in a lot of popular games and does work well.

Of course, AMD’s FSR is starting to roll out in more popular games, but there’s no ignoring that Nvidia has a head start, having worked on DLSS for several years now.

Missing Features

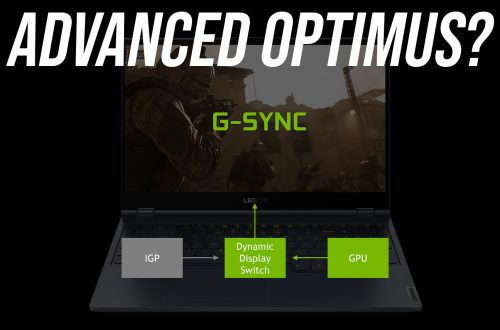

There are some other strange things on the spec sheet, such as the 2050 not offering G-Sync support, though that hardly matters if it’s meant to be a budget-friendly option. A laptop OEM probably isn’t going to spend more money pairing a G-Sync capable display with lower-end hardware anyway.

The spec sheet also lists “N/A” for HDMI and DisplayPort support. Presumably this means no display ports are wired directly to the discrete GPU, i.e. there’s no way of bypassing Optimus for an FPS boost.

Is RTX 2050 Turing or Ampere?

The 2050 naming is a bit weird too, as it’s apparently GA107 (Ampere) silicon, not Turing. My guess is that these are 3050 dies that didn’t make the bin, given that the 2050 and 3050 have the same CUDA core count.

I’m assuming Nvidia didn’t want to use a name like 3040 and taint the newer 30 series lineup with such a low-end part.

Strange Announcement Timing

It’s kind of strange that the announcement came on a Friday, just before many tech media outlets shut down for the Christmas break. I’m guessing Nvidia has a different focus for its CES event in just two weeks’ time.

Here’s Where The 2050 May Do Well

If the goal of the RTX 2050 is simply to replace the GTX 1650 as the entry-level gaming GPU, and it ends up performing similarly (ideally better!) at a similar entry-level price, then I’ve got no real problems with it. Based purely on the specs, the 2050 should perform a bit better while also offering the advantage of DLSS.

I’m not too concerned by the 4GB of GDDR6 memory. Based on the specs, the RTX 2050 is clearly aimed at eSports games, or at modern games on lower settings. You won’t be playing most titles at higher settings anyway, let alone above 1080p.

What I’m most concerned about is the memory bus width and memory bandwidth being lower than the GTX 1650’s. It remains to be seen how this will affect performance in different games. I look forward to providing benchmarks of the 2050 once it’s available in early 2022.
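As a rough illustration of why a narrower bus matters, theoretical peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The sketch below assumes a 64-bit bus with 14 Gbps GDDR6 for the RTX 2050 and a 128-bit bus with 12 Gbps GDDR6 for the GDDR6 version of the GTX 1650. These figures are assumptions to check against Nvidia’s spec sheets, and individual laptops may ship with different memory clocks.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Each pin transfers data_rate_gbps gigabits per second; dividing the
    bus width by 8 converts the total from bits to bytes.
    """
    return bus_width_bits / 8 * data_rate_gbps


# Assumed example configurations (verify against the official spec sheets):
rtx_2050 = peak_bandwidth_gbs(64, 14.0)         # 64-bit GDDR6 at 14 Gbps
gtx_1650_gddr6 = peak_bandwidth_gbs(128, 12.0)  # 128-bit GDDR6 at 12 Gbps

print(f"RTX 2050: {rtx_2050:.0f} GB/s, GTX 1650 (GDDR6): {gtx_1650_gddr6:.0f} GB/s")
```

Peak bandwidth is only a ceiling. Real game performance also depends on clock speeds, power limits and how memory-bound a given title is, which is why benchmarks are still needed.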
